Featured Article

Specially crafted & well-researched article

GitLab CI for PHP Developers

If you are familiar with source code version control, you may have heard about GitLab. GitLab is…

Special Stories

Stories I always love. They never get old 🙂

Run Symfony 5.x Web App on Docker Container

Symfony is one of the best PHP frameworks for developers who want to build enterprise…

How to evaluate resume of a Software Engineer

A lot has changed over time. And now we are living in…

Latest Posts

GitHub Actions/Workflows (CI) for PHP Developers

You probably already know about GitHub, especially if you are a software engineer. Great. Now let’s talk about how, as a PHP developer, you can use GitHub Actions or workflows (groups of actions) to automate your CI jobs. It was a long-awaited feature from GitHub. What are GitHub Actions and workflows? You read my mind. I need to explain this first; otherwise this article will seem useless to you unless you already have a basic understanding of GitHub Actions and workflows. Well, a GitHub action is a specific task that you want to perform each time something changes in your repository (codebase), and a GitHub workflow is a procedure made up of multiple actions. For example, say you have developed a PHP library or application, and you push code to it alone or with other team members. Checking every commit to see who is working on what, or who pushed bad code to your repository, is really difficult. So you want to run automated tests on every commit pushed to your GitHub repository. Do you want to do that manually? Hey John, just write me in Slack that…
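To make the idea of a workflow concrete, here is a minimal sketch of a GitHub Actions workflow that runs PHPUnit on every push and pull request. It is not the configuration from the full article: it assumes a Composer-managed project with PHPUnit in `require-dev`, and the file name `ci.yml`, the PHP version, and the use of the community action `shivammathur/setup-php` are assumptions to adapt to your project.

```yaml
# .github/workflows/ci.yml (file name is an assumption; any .yml file in .github/workflows works)
name: CI

# Trigger the workflow on every push and every pull request
on: [push, pull_request]

jobs:
  phpunit:
    runs-on: ubuntu-latest
    steps:
      # Check out the repository so later steps can see the code
      - uses: actions/checkout@v4

      # Install PHP and Composer (community action; the PHP version is an assumption)
      - uses: shivammathur/setup-php@v2
        with:
          php-version: '8.2'
          tools: composer

      # Install dependencies, including PHPUnit from require-dev
      - run: composer install --no-interaction --prefer-dist

      # Run the tests; a failing test fails this step and the whole workflow run
      - run: vendor/bin/phpunit
```

Each step either runs a shell command (`run`) or reuses a published action (`uses`), which is how a workflow ends up being a sequence of actions.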

GitLab CI for PHP Developers

If you are familiar with source code version control, you may have heard about GitLab. GitLab is a wonderful ecosystem for any software engineering project: code hosting, CI/CD integration, issue tracking, and more. These days, GitLab CI has become an in-demand skill on a resume. So let’s talk about GitLab CI/CD specifically for PHP developers. What is CI? CI is short for Continuous Integration. You continuously update your codebase and add new features, either alone or with other team members. CI plays a vital role here: on every change, it makes sure that nothing bad happened because of that change. You configure a few steps or actions that check everything is still going well, whatever code you or your team members push to the repo. So let’s see how, as a PHP developer, you can run a basic set of tools in your GitLab CI pipelines. I am using a test stage that runs the PHPUnit tests on every commit I push. If the tests fail, it notifies me and marks that commit as failed; if they pass, your recent commit is good. GitLab…
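A minimal `.gitlab-ci.yml` with a single test stage that runs PHPUnit might look like the sketch below. It assumes the project uses Composer with PHPUnit in `require-dev`; the `php:8.2-cli` image tag and the way Composer is installed are illustrative choices, not the setup from the full article.

```yaml
# .gitlab-ci.yml
# Assumption: a Composer project with PHPUnit listed in require-dev
image: php:8.2-cli

stages:
  - test

phpunit:
  stage: test
  before_script:
    # The official php image ships without Composer, git or unzip, so install them first
    - apt-get update && apt-get install -y git unzip
    - curl -sS https://getcomposer.org/installer | php -- --install-dir=/usr/local/bin --filename=composer
    - composer install --no-interaction --prefer-dist
  script:
    # A non-zero exit code here fails the job and marks the commit's pipeline as failed
    - vendor/bin/phpunit
```

GitLab fails a job as soon as any script line exits with a non-zero code, which is what PHPUnit does when a test fails, so no extra logic is needed to flag a bad commit.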

Run Symfony 5.x Web App on Docker Container

Symfony is one of the best PHP frameworks for developers who want to build enterprise-grade web applications or web services. Besides Symfony, Docker is one of the most essential tools every developer needs: Docker containerizes your app. So the question is, what is the best way to run a Symfony web application in a Docker container? Since Symfony 5.x was released, I have tested it with one of the applications I developed. Remember, any kind of web application requires a few services to make it available on the internet or to your audience.

Essential parts of any web application

As a web developer you probably know this very well, but let me write a list here that will help us decide later in this article. To run a web application successfully, we need:
- A web server (Nginx or Apache)
- A database (MySQL, PostgreSQL, etc.)
- The web application itself (in PHP, Python, etc.)

These three are essential in most cases.

So, how do we dockerize a Symfony web app?

To containerize (a.k.a. dockerize) any web application, you have to make sure that the other services (web server, database) are all interconnected. In the context of containerization we should also…
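One common way to interconnect the web server, database, and application is Docker Compose. The sketch below is an assumption-laden starting point, not the setup from the full article: it assumes the Symfony code lives in `./app`, uses the official `php`, `nginx`, and `mysql` images, and mounts a hypothetical Nginx config at `./docker/nginx/default.conf` (document root `/var/www/html/public`, PHP requests passed to `php:9000` over FastCGI). The stock `php:8.1-fpm` image does not include the `pdo_mysql` extension that Doctrine needs, so a real setup would usually build a small custom image that adds it.

```yaml
# docker-compose.yml
# Assumptions: Symfony code in ./app; hypothetical ./docker/nginx/default.conf
# with root /var/www/html/public that passes *.php requests to php:9000 (FastCGI).
services:
  # PHP-FPM executes the Symfony application (listens on port 9000 by default)
  php:
    image: php:8.1-fpm
    volumes:
      - ./app:/var/www/html
    depends_on:
      - database

  # Nginx is the web server; it serves static files and forwards PHP to the php service
  nginx:
    image: nginx:stable
    ports:
      - "8080:80"
    volumes:
      - ./app:/var/www/html
      - ./docker/nginx/default.conf:/etc/nginx/conf.d/default.conf:ro
    depends_on:
      - php

  # MySQL; the other services reach it by its service name, "database"
  database:
    image: mysql:8.0
    environment:
      MYSQL_DATABASE: app
      MYSQL_USER: app
      MYSQL_PASSWORD: change-me          # placeholder, not for production
      MYSQL_ROOT_PASSWORD: change-me-too # placeholder, not for production
    volumes:
      - db-data:/var/lib/mysql

# Named volume so the database files survive container re-creation
volumes:
  db-data:
```

With this layout, Symfony would reach the database through its Compose service name, for example `DATABASE_URL="mysql://app:change-me@database:3306/app"` in `.env.local`, and `docker-compose up -d` (or `docker compose up -d` with Compose v2) starts all three containers on a shared network.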

Obsessed with Laravel instead of PHP – Only for BD Developers

This article is only for Bangladeshi developers. Recently I did some research on the Bangladeshi job market, and I was shocked to see how much harm it is doing to the community. I looked only at the PHP-related professional industry. I also had some bad experiences while reviewing job posts, interviewing candidates, and researching websites. In Bangladesh, Laravel is in huge demand in the web development industry. Developers and companies are seriously (and wrongly) obsessed with Laravel instead of PHP. Sounds funny, right? Yes, it is. I will explain more later in the article, and I am sure you will find it as absurd as I do. Keep reading the entire article.

Laravel & PHP

Laravel is a framework written in the PHP programming language. So, generally, the best and most skilled PHP developers will also be good at Laravel. That’s natural. Companies therefore need to look for PHP developers and their PHP skills, not only Laravel skills.

My findings & why it’s BS to be obsessed with Laravel instead of PHP

1. Simple one-page websites built on Laravel

I just discovered a few one-page websites built with Laravel, for example http://event.mujib100.gov.bd/. Even to develop those one-page…

Enable Docker in PhpStorm – Deploy PHP App in Docker

Everybody is talking about Docker these days. Trust me, you should too. It’s the future of running applications, and deploying your code in Docker containers is becoming very popular. So let’s see how to enable Docker in PhpStorm so you can easily debug your application from PhpStorm in a Docker container. I am assuming that you already have Docker installed on your machine. Note: in this article I am using Ubuntu, so adjust things accordingly. I also assume you have PhpStorm installed. If so, read on; otherwise you need to install both PhpStorm and Docker to make this work. First, go to Settings/Preferences (Ctrl + Alt + S), then go to Build, Execution, Deployment | Docker. You should see “Connection successful”. But if you do this for the first time, you may see a “Cannot connect to the Docker daemon at unix:///var/run/docker.sock” error. If you see this error, it is mostly related to permissions. You need to add the current Ubuntu user to the docker group, for example with sudo usermod -aG docker $USER, and then log out and back in so the new group membership takes effect. After adding yourself to the docker group, do the configuration step again and PhpStorm will connect to your Docker daemon. If you face any trouble to…

JetBrains Space vs Slack – Features Comparison (Free Version)

Productivity is the key to success. That’s true in personal life as well as for any business. Everybody struggles to boost productivity. We have had Slack for team collaboration for several years, but JetBrains Space looks like a new king on the market. In this article, I want to present a feature comparison between JetBrains Space and Slack (free versions). Note: this article will be updated regularly while I continue exploring the JetBrains Space EAP.

Feature comparison – Slack vs JetBrains Space

Before moving further, let me tell you something. JetBrains Space is a very new product, released by JetBrains a couple of days ago. It is not even public yet. I was fortunate enough to get access to their EAP (Early Access Program), and this article is based on my findings. As I am still exploring it, I will keep improving this article to keep its information up to date. So, let’s go straight to the facts.

Messaging limit

When we talk about team collaboration, messaging is a mandatory feature. Slack and JetBrains Space both have it, but here JetBrains Space gives more than Slack. For…

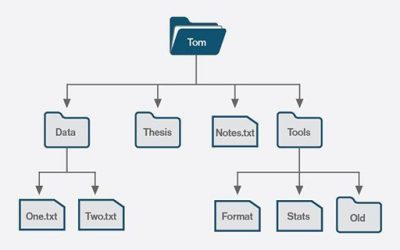

Fscrawler – File System Crawl & Indexing Library

Have you ever thought about indexing your entire file system in a database, with each file’s meta info and contents? Or have you faced a problem that required you to search and run queries to find documents or content in a large file system? It’s a common experience we all face. Maybe I am right and you face it too. To crawl a file system and index all the files, their meta info, and their contents, fscrawler is a fantastic library, and it’s already very popular among system administrators, DevOps teams, and whoever manages IT infrastructure. So let’s talk about exactly what fscrawler is.

What is fscrawler?

From the name, I guess you understand its purpose: fs (file system) + crawl (watch for changes, crawl recursively). That’s fscrawler. It’s an open source library, actively maintained in its GitHub repository, and it is already very popular; if you look at its GitHub issues, open PRs, etc., you will notice that. Another important piece of information: David Pilato, the owner of this library, works at Elastic, the company behind Elasticsearch. I will explain later why that matters for a library.

Feature – crawling & indexing file system

It’s the primary feature of fscrawler.…
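To illustrate how a crawl job is described, here is a sketch of an fscrawler job settings file. Treat it as an assumption-based example rather than the configuration from the full article: the job name `my_docs`, the directory path, and the Elasticsearch URL are placeholders, and the field layout follows the `_settings.yaml` format of 2.7-era releases, so newer versions may rename some fields. fscrawler reads this file from `~/.fscrawler/my_docs/_settings.yaml` when the job is started by name (for example `bin/fscrawler my_docs`).

```yaml
# ~/.fscrawler/my_docs/_settings.yaml
# "my_docs", the directory path and the Elasticsearch URL are placeholders.
---
name: "my_docs"
fs:
  # Directory to crawl recursively
  url: "/path/to/documents"
  # How often fscrawler re-scans the directory for changes
  update_rate: "15m"
  # Skip temporary files whose names start with "~"
  excludes:
  - "*/~*"
  # Extract and index file contents, not only metadata
  index_content: true
  # Remove documents from the index when the files are deleted from disk
  remove_deleted: true
elasticsearch:
  nodes:
  - url: "http://127.0.0.1:9200"
```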

Rsync – Useful techniques & usage

I already wrote about rsync five years ago in another blog post, but today I am going to write a separate article that will help you master rsync. Rsync is one of the most useful tools for system administrators and others responsible for DevOps tasks, so this article is worth bookmarking as an rsync reference. I am not going to write much about what it is and why it’s useful; I will go straight to its usage. First, we need to know the basic command structure of rsync.

Command format

You need to understand the basics to format an rsync command. The general form is: rsync [OPTION]... SRC [SRC]... DEST

Common options for rsync

Here are some common rsync options that are the most popular and are needed for most use cases:
-q, --quiet: suppress message output
-h, --human-readable: display output numbers in a human-readable format
-a, --archive: archive mode for files and directories while synchronizing (-a is equivalent to -rlptgoD)
-v, --verbose: verbose output
-b, --backup: make backups during synchronization
-l, --links: copy symlinks as symlinks during the sync
-r, --recursive: sync files and directories recursively
-n, --dry-run: perform a…

SellCodes – New Platform To Buy & Sell Codes

For the last 7 years I have had lots of experimental code that I never released anywhere, because it was all part of big projects and practice. But a couple of months ago I was introduced to sellcodes.com, where I can easily buy and sell code. Actually, anything. Now I am finishing all my snippets and code, from little to big, and publishing them on sellcodes.com. Unlike other platforms such as ThemeForest and CodeCanyon, SellCodes currently has a very flexible approval process for developers who want to sell their code and earn money. You earn up to 95% of your total sales. That’s huge. I mostly fell in love with SellCodes because:
- You can get your items approved instantly
- Payout is 95%
- Frequent payouts
- Affiliate program
- No restrictions on the product/service type
- It allows you to run membership programs
- You can receive recurring payments from your users
- Very easy integration: you can integrate your Sellcodes offer into your website or any digital platform with a single click

Sellcodes for developers

If you are a developer looking for a platform where you can sell your tools, code snippets, themes, plugins, or any kind of digital item, I think Sellcodes can be a…

Add Bootstrap CSS in WordPress Plugin page

I am assuming that you are already familiar with WordPress and the Bootstrap CSS framework; that’s probably why you came here. I also guess you are a WordPress plugin developer. If so, this article may save you a lot of time designing your plugin’s administrative pages inside the WP admin panel, if you can use Bootstrap. Let’s see how we can use Bootstrap CSS on a WordPress plugin page.

Why Bootstrap CSS framework for a WP plugin?

Because most developers and frontend designers are already familiar with Bootstrap; it’s the most popular CSS framework currently available. You can design your frontend with less work and in less time. Ultimately, using Bootstrap CSS will boost your productivity. As a WordPress plugin developer, you know that most plugins now have their own configuration and administrative pages inside the WP admin panel. I guess your plugin does too. So now you want to design it with your existing design knowledge. Am I correct? OK, this is where the Bootstrap CSS framework comes in.

What’s the problem?

Conflict with WordPress core CSS? Yes. When you just add any third-party or extra CSS…

WP Mail Gateway – Send mail via multiple mail gateways

For the last couple of years I haven’t developed plugins for public use. I have developed many WordPress plugins for my clients, but not under my own name. Just two days ago I published a brand new WordPress plugin called “WP Mail Gateway”. From the name you can guess what it does, can’t you? OK, keep reading this article to learn more about the WP Mail Gateway WordPress plugin and how it can help you.

Sending email from a WordPress site

Whether it’s a business, professional, or personal website or blog, every website needs to send email to someone on certain events. I know that and you know that. But sending mail from a website is not that easy. It involves a couple of things. First of all, it depends on your server configuration, PHP configuration, and CMS (WordPress) configuration. Most of the time we use SMTP as the only way to send email. But times have changed: to keep up deliverability and keep your domain reputation intact, we prefer to use a third-party email gateway provider. Most providers, like Mailgun, Mandrill, Mailjet, and Postmark, allow you to send a limited number of free emails, plus paid emails, through their gateway.…

Finally goodbye to the old Search Console

Google has finally said goodbye to its old Search Console. On behalf of the Search Console team, Hillel Maoz announced it in an official blog post today, which also includes a goodbye picture. It seems the team will no longer work on the old one. The new Search Console had already been available for a couple of months, even a year, and the team had been migrating all the important features from the old Search Console to the new one. Still, some features were pending. Today I saw a new section in the new Search Console, “Legacy tools & reports”, where all the remaining features have been collected. You can always share your feedback in the Webmasters community.

Legacy tools & reports

In this new section, you will find the following features from the old Search Console:
- International targeting
- Removals
- Crawl stats
- Messages
- URL parameters
- Web Tools

Native Lazy Load WordPress plugin by Google

Google never rests in helping webmasters improve the web experience. Nearly 34% of websites are powered by WordPress, and improving website performance is a key responsibility for most webmasters. A couple of days ago, Google released a native lazy load WordPress plugin that will boost the overall performance of any WordPress website. Chrome 76 has already been released with support for the “loading” attribute, so with this plugin your website can gain significant performance improvements from lazy loading. “Native” means this lazy loading functionality doesn’t rely on any third-party JavaScript; it just uses the “loading” attribute in browsers that support it. Before this, webmasters usually used third-party JavaScript to lazy-load media on their websites. But JavaScript resources are also heavy and have a performance cost. So from now on, without relying on any third-party JavaScript, this native lazy loading WordPress plugin can give you tremendous speed improvements for your WordPress site. You should also know that if a browser doesn’t support the “loading” attribute yet, the plugin has its own fallback mechanism, so lazy loading will not break. Currently Chrome added…

Content writing idea from Google Discover Feed

What’s the most critical task for a blogger or an SEO expert? It’s writing content. “Content is king”, and we can’t deny it. It feeds Google’s search engine algorithm and keeps your site’s ranking healthy. But getting content writing ideas is even more crucial for everybody. Every night before going to sleep, I rack my brain for new content ideas. Trust me, it happens to me every day and every night. I can’t get rid of this because I don’t want to. But for many years, back when I had no blog or place to write, I had a habit (since birth): reading. I love reading about many topics. I read an average of 30 to 35 articles daily. Sometimes my mobile phone keeps crying because so many tabs are always open in Chrome on my Android phone.

Why is writing content crucial?

Because you need to write something that people actually want to read. Otherwise, there is no value in writing great content on your website; you might as well keep writing arbitrary content in your notebook. But you want to write great articles for your website because you want to bring in visitors and want them to read your…

Alibaba Cloud Anniversary: It’s 10 Years

It was 10 September 2009 when Alibaba Cloud started its journey, and now it’s 2019: Alibaba Cloud’s 10th anniversary. Everybody knows what it is by now, so I don’t need to introduce it. Alibaba claims that in the last 10 years it has reached the #3 position among cloud service providers in the world. I couldn’t find an accurate ranking of cloud service providers, but whether or not it is number 3, it has already gained a large market share in this industry. So why is nobody talking about Alibaba Cloud? That’s a question for myself too, and I will try to cover it later. Read this full article, which covers everything about Alibaba Cloud’s 10th anniversary.

Cloud market share for Alibaba Cloud

For this 10th anniversary, I tried to find Alibaba Cloud’s market share in the public cloud service industry. According to the Gartner IT Service Report 2018 (IaaS and IUS Market Share), Alibaba Cloud is the number 1 cloud provider in APAC (Asia Pacific). But that doesn’t put it at the top worldwide. Amazon, Microsoft, Google, Alibaba, and IBM are playing major roles as cloud service providers in this competitive landscape. In the…
